Overview

Bring your MetaHuman and custom characters to life with real-time lip synchronization that runs completely offline and works across platforms.

Three Advanced Models for Every Project Need

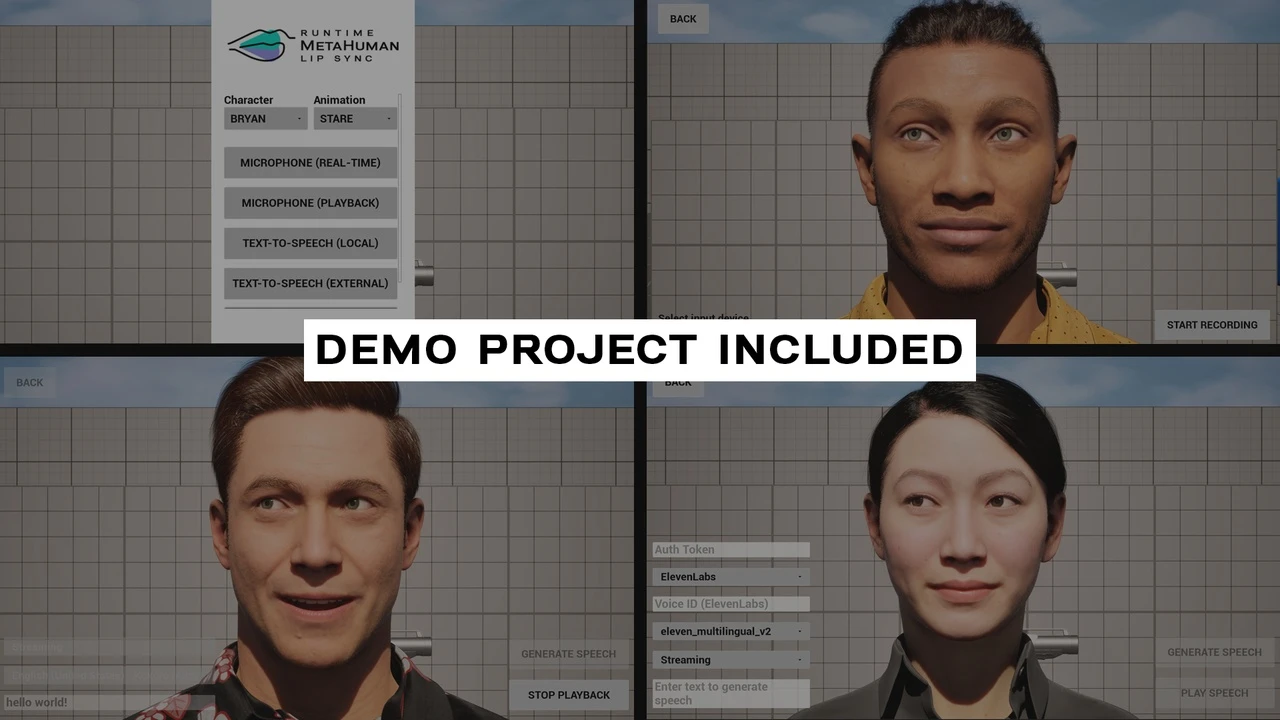

The plugin ships with three models, so you can balance visual fidelity, emotional expressiveness, and character compatibility to suit your project. Choose from:

- Mood-Enabled Realistic Model – Emotion-aware facial animation with 12 different moods and smart lookahead timing.

- Realistic Model – Enhanced visual fidelity with more natural mouth movements and 81 facial controls.

- Standard Model – Broad compatibility with MetaHumans and custom characters, supporting 14 visemes.

Key Features

- Real-time lip sync from microphone input and any other audio source

- Emotional expression control

- Dynamic laughter animations

- Pixel Streaming microphone support

- Offline processing

- Cross-platform compatibility

- Optimized for real-time performance

- Works with both MetaHuman and custom characters

- Multiple audio sources

- Works well with Runtime Audio Importer, Runtime Text To Speech, and Runtime AI Chatbot Integrator

Included Formats

This plugin targets Unreal Engine and works with both MetaHuman and custom character models.

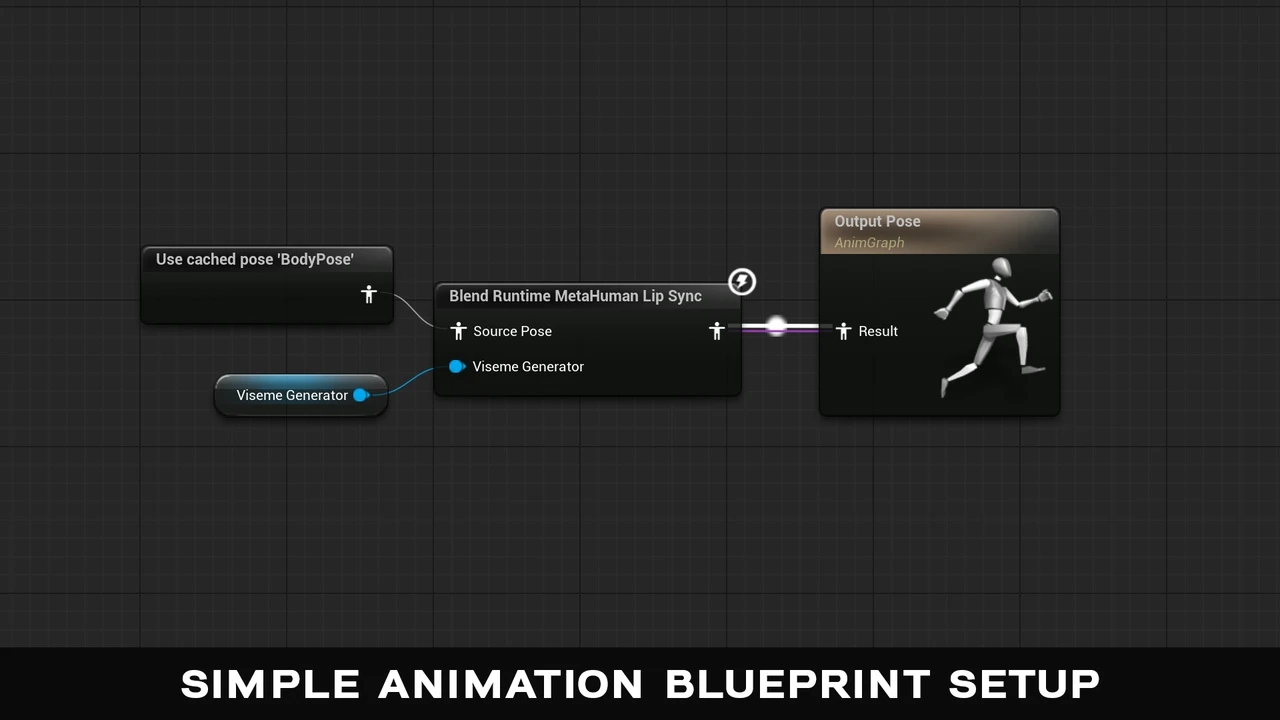

Technical Details

The plugin performs real-time lip synchronization by processing audio input to generate visemes, the mouth shapes that correspond to speech sounds. Lip sync inference runs through ONNX Runtime (the onnxruntime library), a cross-platform native machine learning accelerator.
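
To make the audio-to-viseme idea concrete, here is a minimal Python sketch of that kind of pipeline. It is not the plugin's code or API: the function names, the frame size, and `toy_model` are all hypothetical, and the placeholder model stands in for the real inference that the plugin runs natively through onnxruntime. The only value taken from this page is the Standard Model's count of 14 visemes.

```python
import math
from typing import Callable, List, Sequence

NUM_VISEMES = 14  # Standard Model viseme count; everything else here is an assumption


def split_frames(samples: Sequence[float], frame_size: int) -> List[List[float]]:
    """Split audio into consecutive, non-overlapping frames, dropping any partial tail."""
    return [list(samples[i:i + frame_size])
            for i in range(0, len(samples) - frame_size + 1, frame_size)]


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn raw scores into non-negative weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def frames_to_visemes(samples: Sequence[float], frame_size: int,
                      model: Callable[[List[float]], List[float]]) -> List[List[float]]:
    """Run the model on each frame and return one list of viseme weights per frame."""
    return [softmax(model(frame)) for frame in split_frames(samples, frame_size)]


def toy_model(frame: List[float]) -> List[float]:
    """Hypothetical stand-in for real inference: picks a viseme index from frame loudness."""
    energy = sum(x * x for x in frame) / len(frame)
    loud_index = min(NUM_VISEMES - 1, int(energy * NUM_VISEMES))
    return [1.0 if i == loud_index else 0.0 for i in range(NUM_VISEMES)]
```

Calling `frames_to_visemes(samples, 160, toy_model)` returns one weight list per complete 160-sample frame. In a real setup the weights would come from a trained model and would drive the character's facial controls each frame.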
